I wanted to describe how this website is built. My blog is a static site generated by Hugo, an excellent tool, which makes it a serverless solution. This is already the second version of the blog; the previous one was built with Gatsby. Since I am a big fan of the Go programming language and builds of the Gatsby site were slow, I decided to migrate to Hugo.

S3, CloudFront & Lambda@Edge

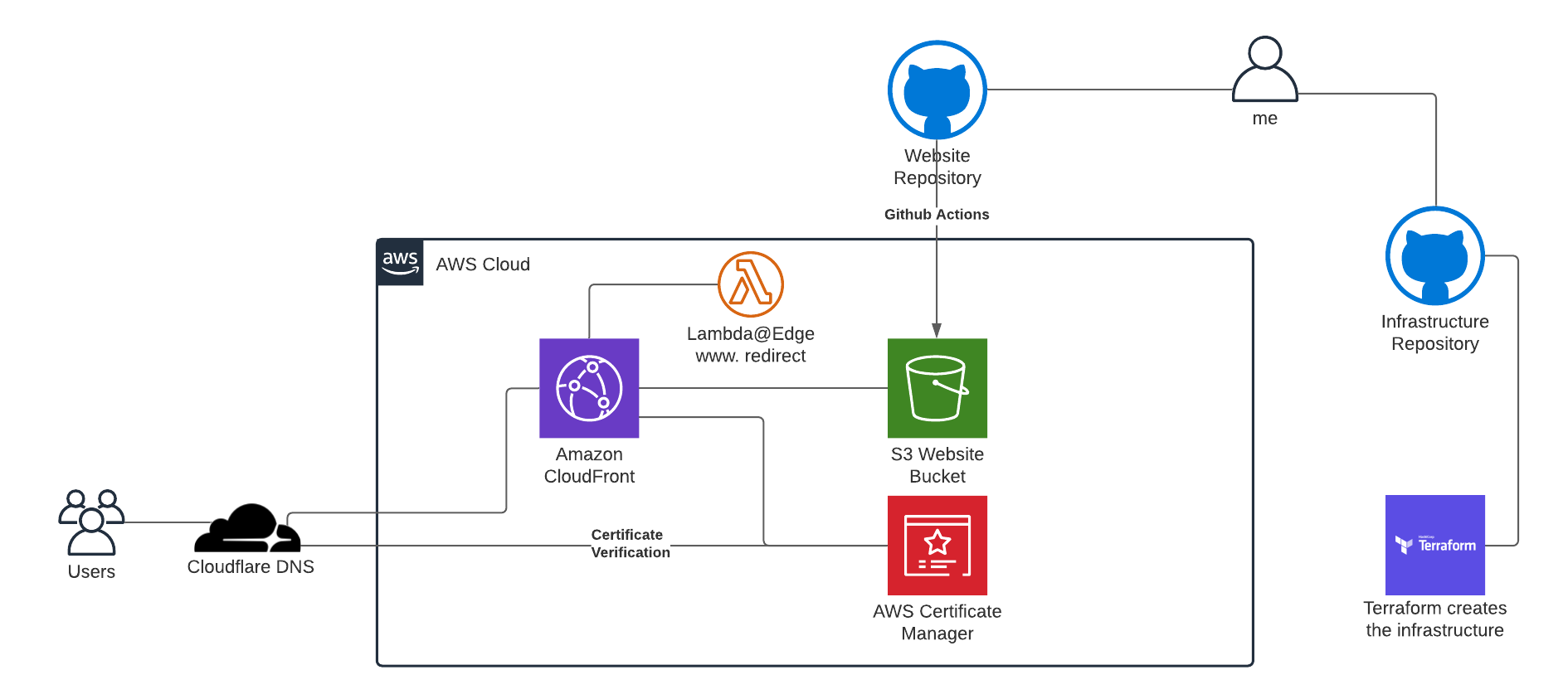

Here you can see the architecture of the website. Everything starts with an S3 bucket containing all the files generated by Hugo. The website is then served through CloudFront, which acts as a CDN. There is also a small Lambda@Edge function that redirects requests without the www. prefix (piotrbelina.com) to www.piotrbelina.com. The code for this Lambda is simple:

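```javascript
exports.handler = async (event) => {
  // CloudFront passes the viewer request in the first (and only) record.
  const request = event.Records[0].cf.request;

  // Redirect only requests addressed to the apex domain.
  if (request.headers.host[0].value === 'piotrbelina.com') {
    // Keep the query string, if any, so links with parameters survive the redirect.
    const query = request.querystring ? `?${request.querystring}` : '';

    // Answer directly from the edge with a permanent redirect to the www domain.
    return {
      status: '301',
      statusDescription: 'Redirecting to www domain',
      headers: {
        location: [{
          key: 'Location',
          value: `https://www.piotrbelina.com${request.uri}${query}`
        }]
      }
    };
  }

  // Any other host (www.piotrbelina.com) continues to the cache and origin unchanged.
  return request;
};
```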
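Because the handler only reads a few fields of the request, its decision logic can be exercised locally without deploying anything to CloudFront. The sketch below is not the deployed code: `buildRedirect` is a hypothetical helper holding the same host check, and the request object is hand-built to mimic the shape of `event.Records[0].cf.request` in a viewer-request event (lowercase header names mapping to arrays of `{ key, value }`).

```javascript
// Hypothetical helper with the same host check as the Lambda@Edge handler.
// Returns a CloudFront redirect response, or null when the request should pass through.
function buildRedirect(request, apexHost, wwwHost) {
  const host = request.headers.host && request.headers.host[0].value;
  if (host !== apexHost) {
    return null;
  }
  // Append the query string, if any, to the redirect target.
  const query = request.querystring ? `?${request.querystring}` : "";
  return {
    status: "301",
    statusDescription: "Redirecting to www domain",
    headers: {
      location: [{ key: "Location", value: `https://${wwwHost}${request.uri}${query}` }],
    },
  };
}

// Hand-built request shaped like event.Records[0].cf.request (values are made up).
const sampleRequest = {
  uri: "/posts/hello/",
  querystring: "ref=newsletter",
  headers: { host: [{ key: "Host", value: "piotrbelina.com" }] },
};

const response = buildRedirect(sampleRequest, "piotrbelina.com", "www.piotrbelina.com");
console.log(response && response.headers.location[0].value);
```

Keeping the decision in a plain function like this makes it easy to unit test, while the exported handler stays a thin wrapper around the CloudFront event.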

The Terraform code for this CloudFront and Lambda deployment looks like this:

```hcl
# Package redirect.js into a zip archive for Lambda.
data "archive_file" "redirect" {
  type        = "zip"
  source_file = "${path.module}/redirect.js"
  output_path = "${path.module}/redirect.zip"
}

resource "aws_lambda_function" "redirect" {
  filename      = data.archive_file.redirect.output_path
  function_name = "redirect"
  role          = aws_iam_role.lambda_at_edge.arn
  handler       = "redirect.handler"

  # Redeploy the function whenever the zipped source changes.
  source_code_hash = data.archive_file.redirect.output_base64sha256

  runtime = "nodejs20.x"

  # Lambda@Edge requires a published, numbered version; CloudFront references it through qualified_arn.
  publish = true

  # Lambda@Edge functions must be created in us-east-1.
  provider = aws.east
}
```
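`source_code_hash` is what makes Terraform notice a changed `redirect.js`: `output_base64sha256` is the base64-encoded SHA-256 digest of the zip, and a new value triggers an update of the function code. Below is a small Node.js sketch computing the same digest, which can be handy for comparing a local archive with the `CodeSha256` value Lambda reports; the `redirect.zip` path is only an example.

```javascript
const crypto = require("crypto");
const fs = require("fs");

// Base64-encoded SHA-256 of a byte buffer: the same format as Terraform's
// filebase64sha256() / archive_file output_base64sha256 and Lambda's CodeSha256.
function base64Sha256(buffer) {
  return crypto.createHash("sha256").update(buffer).digest("base64");
}

// Hash a file on disk, e.g. the archive produced by the archive_file data source.
function hashFile(path) {
  return base64Sha256(fs.readFileSync(path));
}

// Example path (assumed); only hashed if the archive exists locally.
if (fs.existsSync("redirect.zip")) {
  console.log(hashFile("redirect.zip"));
}
```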

```hcl
/**
 * Trust policy allowing the Lambda and Lambda@Edge services to assume the function's role.
 */
data "aws_iam_policy_document" "assume_role_policy_doc" {
  statement {
    sid     = "AllowAwsToAssumeRole"
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type = "Service"
      identifiers = [
        "lambda.amazonaws.com",
        "edgelambda.amazonaws.com",
      ]
    }
  }
}

/**
 * Execution role the function runs with. Permissions to write logs to CloudWatch
 * are attached to it separately.
 */
resource "aws_iam_role" "lambda_at_edge" {
  name               = "redirect-role"
  assume_role_policy = data.aws_iam_policy_document.assume_role_policy_doc.json
}
```
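The trust policy lists two service principals because a Lambda@Edge execution role has to be assumable by both `lambda.amazonaws.com` and `edgelambda.amazonaws.com`. For reference, here is a sketch that builds a JSON document roughly equivalent to what `aws_iam_policy_document` renders; the helper name is hypothetical and Terraform's exact formatting may differ.

```javascript
// Hypothetical helper building an IAM trust policy equivalent to
// data.aws_iam_policy_document.assume_role_policy_doc.
function trustPolicy(services) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Sid: "AllowAwsToAssumeRole",
        Effect: "Allow",
        Action: "sts:AssumeRole",
        // Each listed AWS service may call sts:AssumeRole on this role.
        Principal: { Service: services },
      },
    ],
  };
}

const doc = trustPolicy(["lambda.amazonaws.com", "edgelambda.amazonaws.com"]);
console.log(JSON.stringify(doc, null, 2));
```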

```hcl
/**
 * Allow lambda to write logs.
 */
data "aws_iam_policy_document" "lambda_logs_policy_doc" {
  statement {
    effect    = "Allow"
    resources = ["*"]
    actions = [
      "logs:CreateLogStream",
      "logs:PutLogEvents",
      # Lambda@Edge logs are logged into Log Groups in the region of the edge location
      # that executes the code. Because of this, we need to allow the lambda role to create
      # Log Groups in other regions
      "logs:CreateLogGroup",
    ]
  }
}

/**
 * Attach the policy giving log write access to the IAM Role
 */
resource "aws_iam_role_policy" "logs_role_policy" {
  name   = "redirect-at-edge"
  role   = aws_iam_role.lambda_at_edge.id
  policy = data.aws_iam_policy_document.lambda_logs_policy_doc.json
}

/**
 * Creates a CloudWatch log group for this function to log to.
 * With Lambda@Edge, only test runs will log to this group. All
 * logs in production will be logged to a log group in the region
 * of the CloudFront edge location handling the request.
 */
resource "aws_cloudwatch_log_group" "log_group" {
  name = "/aws/lambda/redirect"
}
```
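This is why the role is allowed to call `logs:CreateLogGroup`: in production, Lambda@Edge writes to a log group in the AWS Region closest to the edge location that ran the function, and AWS documents that group's name as `/aws/lambda/us-east-1.<function-name>`. A tiny sketch of the two names involved for this function (the helper names are illustrative):

```javascript
// Log group used when the function runs in us-east-1 itself (e.g. test invocations);
// this is the group created by aws_cloudwatch_log_group.log_group.
function testLogGroupName(functionName) {
  return `/aws/lambda/${functionName}`;
}

// Log group Lambda@Edge creates in the Region near the edge location serving the request.
// The "us-east-1." prefix is fixed because Lambda@Edge functions live in us-east-1.
function edgeLogGroupName(functionName) {
  return `/aws/lambda/us-east-1.${functionName}`;
}

console.log(testLogGroupName("redirect"));
console.log(edgeLogGroupName("redirect"));
```

So when looking for production logs of the redirect, search for the `us-east-1.redirect` group in the Region near your visitors, not in us-east-1.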

```hcl
resource "aws_cloudfront_distribution" "www_distribution" {
  origin {
    # S3 website endpoints only support HTTP, so CloudFront talks to the origin over HTTP.
    custom_origin_config {
      http_port              = 80
      https_port             = 443
      origin_protocol_policy = "http-only"
      origin_ssl_protocols   = ["TLSv1", "TLSv1.1", "TLSv1.2"]
    }

    domain_name = aws_s3_bucket.wwwpiotrbelinacom.website_endpoint
    origin_id   = var.www_domain_name
  }

  logging_config {
    include_cookies = false
    bucket          = aws_s3_bucket.piotrbelinacom_logs.bucket_domain_name
  }

  // All values are defaults from the AWS console.
  default_cache_behavior {
    viewer_protocol_policy = "redirect-to-https"
    compress               = true
    allowed_methods        = ["GET", "HEAD"]
    cached_methods         = ["GET", "HEAD"]
    // This needs to match the `origin_id` above.
    target_origin_id       = var.www_domain_name
    min_ttl                = 0
    default_ttl            = 86400
    max_ttl                = 31536000

    forwarded_values {
      query_string = false
      cookies {
        forward = "none"
      }
    }

    // Run the redirect function on every viewer request, before the cache is checked.
    lambda_function_association {
      event_type   = "viewer-request"
      lambda_arn   = aws_lambda_function.redirect.qualified_arn
      include_body = false
    }
  }

  // Here we're ensuring we can hit this distribution using www.piotrbelina.com
  // (and the apex piotrbelina.com, which the Lambda redirects)
  // rather than the domain name CloudFront gives us.
  aliases = [var.www_domain_name, var.root_domain_name]

  restrictions {
    geo_restriction {
      restriction_type = "none"
    }
  }

  // Here's where our certificate is loaded in!
  viewer_certificate {
    acm_certificate_arn = aws_acm_certificate.certificate.arn
    ssl_support_method  = "sni-only"
  }

  price_class         = "PriceClass_100"
  enabled             = true
  default_root_object = "index.html"
}
```
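The origin is the bucket's S3 website endpoint rather than its REST endpoint. CloudFront's `default_root_object` only applies to a request for the root URL, whereas S3 static website hosting also serves the index document for any path ending in `/`, which suits Hugo's `section/page/index.html` output. A simplified sketch of that mapping, assuming the bucket's index document is `index.html` (the bucket's website configuration is not shown in this post):

```javascript
// Simplified model of how S3 static website hosting maps a request path to an object key.
// Assumes the index document is "index.html"; error documents, routing rules and the
// redirect S3 issues for "/folder" without a trailing slash are ignored.
function websiteObjectKey(uri, indexDocument = "index.html") {
  // Object keys have no leading slash.
  const key = uri.replace(/^\/+/, "");
  // The root and any path ending in "/" resolve to that folder's index document.
  if (key === "" || key.endsWith("/")) {
    return key + indexDocument;
  }
  return key;
}

console.log(websiteObjectKey("/"));             // index.html
console.log(websiteObjectKey("/posts/hugo/"));  // posts/hugo/index.html
console.log(websiteObjectKey("/css/main.css")); // css/main.css
```

With the REST endpoint as origin, only `/` would be rewritten (by `default_root_object`), so a URL like `/posts/hugo/` would not find its `index.html` without extra logic.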

Cloudflare DNS, ACM & HTTPS

DNS is managed with Cloudflare. This means that the piotrbelina.com record has to be a CNAME pointing to the CloudFront distribution's domain name. In addition, the DNS validation record for the ACM certificate has to be created in the zone.

```hcl
resource "cloudflare_record" "root" {
  zone_id = var.cloudflare_zone_id
  name    = ""
  value   = aws_cloudfront_distribution.www_distribution.domain_name
  type    = "CNAME"
  proxied = true
}
```

```hcl
resource "aws_acm_certificate" "certificate" {
  # CloudFront only accepts ACM certificates issued in us-east-1.
  provider                  = aws.east
  domain_name               = "*.${var.root_domain_name}"
  validation_method         = "DNS"
  subject_alternative_names = [var.root_domain_name]

  lifecycle {
    create_before_destroy = true
  }
}
```
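The certificate needs `subject_alternative_names` because a wildcard covers exactly one extra DNS label: `*.piotrbelina.com` matches `www.piotrbelina.com` but neither the apex `piotrbelina.com` nor `a.b.piotrbelina.com`. A simplified sketch of that matching rule, in the spirit of RFC 6125 and ignoring internationalized names:

```javascript
// Simplified check of whether a certificate name covers a hostname.
// A leading "*." matches exactly one label; any other name must match exactly.
function certCovers(certName, hostname) {
  const cert = certName.toLowerCase();
  const host = hostname.toLowerCase();
  if (!cert.startsWith("*.")) {
    return cert === host;
  }
  const suffix = cert.slice(1); // e.g. ".piotrbelina.com"
  if (!host.endsWith(suffix)) {
    return false;
  }
  const label = host.slice(0, host.length - suffix.length);
  // The wildcard must stand for a single, non-empty label without dots.
  return label.length > 0 && !label.includes(".");
}

// Names on the certificate: the wildcard plus the apex as a SAN.
const names = ["*.piotrbelina.com", "piotrbelina.com"];
for (const host of ["www.piotrbelina.com", "piotrbelina.com", "a.b.piotrbelina.com"]) {
  console.log(host, names.some((name) => certCovers(name, host)));
}
```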

```hcl
data "cloudflare_zones" "this" {
  filter {
    name   = "piotrbelina.com"
    status = "active"
    paused = false
  }
}

locals {
  cert_validation_record = element(tolist(aws_acm_certificate.certificate.domain_validation_options), 0)
}

resource "cloudflare_record" "cert_validation" {
  name    = local.cert_validation_record.resource_record_name
  value   = local.cert_validation_record.resource_record_value
  type    = local.cert_validation_record.resource_record_type
  zone_id = data.cloudflare_zones.this.zones[0].id
  ttl     = 3600
  # ACM must see the real CNAME target, so the validation record is not proxied.
  proxied = false

  depends_on = [
    aws_acm_certificate.certificate
  ]

  lifecycle {
    ignore_changes = [value]
  }
}
```
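Taking only the first entry of `domain_validation_options` works here because ACM asks for the same CNAME record for `*.piotrbelina.com` and `piotrbelina.com`, so both entries describe one DNS record. If more names were added to the certificate, each distinct record would need its own `cloudflare_record`. A sketch of that de-duplication; the input objects use the attribute names Terraform exposes (`domain_name`, `resource_record_name`, `resource_record_type`, `resource_record_value`), while the record values themselves are made up:

```javascript
// Collapse ACM domain validation options into the distinct DNS records to create.
function uniqueValidationRecords(options) {
  const records = new Map();
  for (const option of options) {
    const key = `${option.resource_record_name}|${option.resource_record_type}`;
    // Keep the first option for each record name/type pair.
    if (!records.has(key)) {
      records.set(key, {
        name: option.resource_record_name,
        type: option.resource_record_type,
        value: option.resource_record_value,
      });
    }
  }
  return [...records.values()];
}

// Made-up example values: the wildcard and the apex share one validation record.
const options = [
  {
    domain_name: "*.piotrbelina.com",
    resource_record_name: "_abc123.piotrbelina.com.",
    resource_record_type: "CNAME",
    resource_record_value: "_def456.acm-validations.aws.",
  },
  {
    domain_name: "piotrbelina.com",
    resource_record_name: "_abc123.piotrbelina.com.",
    resource_record_type: "CNAME",
    resource_record_value: "_def456.acm-validations.aws.",
  },
];

console.log(uniqueValidationRecords(options));
```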

Conclusion

That is basically everything needed to run the serverless blog described above. Of course, you could use a different DNS provider, such as AWS Route 53; the principles remain the same. In the next post I will describe how to implement Continuous Deployment with GitHub Actions.